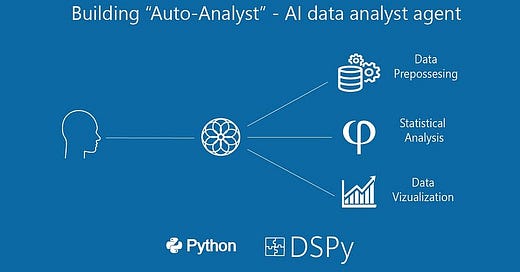

Building “Auto-Analyst” — A data analytics AI agentic system

A technical guide on making a AI ‘Auto-Analyst’

I’ve been developing AI-powered agents to reduce my workload as a data scientist/analyst. While pop culture often shows AI taking over human jobs, in reality, most AI agents aren’t replacements for humans. Instead, they help us work more efficiently. This agent is designed to do just that. Previously, I had designed a data visualization agent which helped me make visualizations faster, using only natural language inputs.

Design

The flow diagram illustrates a system that begins with a user-defined goal. The planner agent then delegates tasks to a group of worker agents, each responsible for generating code to address a specific part of the problem. Finally, all the individual pieces of code are gathered and integrated by a code combiner agent, resulting in a single, cohesive script that accomplishes the entire goal.

Note: The planner agent could delegate to some of the agents, not necessarily to all. Also, each agent would have its own set of inputs not shown in the diagram.

Want someone to design and build AI agents for you? Or having trouble with agents/RAG application you’re building. Feel free to reach out:

https://form.jotform.com/240744327173051

Individual components

This blog post will guide you step-by-step through building the agent, presenting code blocks for each individual component. In the following section, we’ll demonstrate how these parts seamlessly integrate.

Planner Agent

The planner agent takes three inputs, user-defined goal, the datasets available, and agents' descriptions. It outputs a plan in this format:

Agent1-> Agent2-> Agent3….

# You can use other orchestration libraries but I found DSPy

# good for building fast, simpler and evaluation (making the application more relibale)

import dspy

# This object inherits from the dspy.Signature class

# The text inside """ is the prompt

class analytical_planner(dspy.Signature):

""" You are data analytics planner agent. You have access to three inputs

1. Datasets

2. Data Agent descriptions

3. User-defined Goal

You take these three inputs to develop a comprehensive plan to achieve the user-defined goal from the data & Agents available.

In case you think the user-defined goal is infeasible you can ask the user to redefine or add more description to the goal.

Give your output in this format:

plan: Agent1->Agent2->Agent3

plan_desc = Use Agent 1 for this reason, then agent2 for this reason and lastly agent3 for this reason.

You don't have to use all the agents in response of the query

"""

# Input fields and their descriptions

dataset = dspy.InputField(desc="Available datasets loaded in the system, use this df_name,columns set df as copy of df_name")

Agent_desc = dspy.InputField(desc= "The agents available in the system")

goal = dspy.InputField(desc="The user defined goal ")

# Output fields and their description

plan = dspy.OutputField(desc="The plan that would achieve the user defined goal")

plan_desc= dspy.OutputField(desc="The reasoning behind the chosen plan")Analysis Agents

Most analysis agents share a common structure with slight variations in their prompts. They accept two inputs: the user-defined goal and the dataset index. They produce two outputs: the code for the analysis and commentary, which can be useful for debugging or redirecting the agent.

# I define analysis agents as those agents that are in the middle-layer

# they produce code for a specialised data analysis task

class preprocessing_agent(dspy.Signature):

""" You are a data pre-processing agent, your job is to take a user-defined goal and available dataset,

to build an exploratory analytics pipeline. You do this by outputing the required Python code.

You will only use numpy and pandas, to perform pre-processing and introductory analysis

"""

dataset = dspy.InputField(desc="Available datasets loaded in the system, use this df_name,columns set df as copy of df_name")

goal = dspy.InputField(desc="The user defined goal ")

commentary = dspy.OutputField(desc="The comments about what analysis is being performed")

code = dspy.OutputField(desc ="The code that does the data preprocessing and introductory analysis")

class statistical_analytics_agent(dspy.Signature):

""" You are a statistical analytics agent.

Your task is to take a dataset and a user-defined goal, and output

Python code that performs the appropriate statistical analysis to achieve that goal.

You should use the Python statsmodel library"""

dataset = dspy.InputField(desc="Available datasets loaded in the system, use this df_name,columns set df as copy of df_name")

goal = dspy.InputField(desc="The user defined goal for the analysis to be performed")

commentary = dspy.OutputField(desc="The comments about what analysis is being performed")

code = dspy.OutputField(desc ="The code that does the statistical analysis using statsmodel")

class sk_learn_agent(dspy.Signature):

# Prompt

"""You are a machine learning agent.

Your task is to take a dataset and a user-defined goal, and output Python code that performs the appropriate machine learning analysis to achieve that goal.

You should use the scikit-learn library."""

# Input Fields

dataset = dspy.InputField(desc="Available datasets loaded in the system, use this df_name,columns. set df as copy of df_name")

goal = dspy.InputField(desc="The user defined goal ")

# Output Fields

commentary = dspy.OutputField(desc="The comments about what analysis is being performed")

code = dspy.OutputField(desc ="The code that does the Exploratory data analysis")

## I worked on the data-viz agent and already optimized using DSPy.

## The only big difference is that this agents takes another input of styling index

Code Combiner Agent

The purpose of this agent is to clean the output from all the agents into one coherent script. It takes a long str of a list of code, and outputs code.

class code_combiner_agent(dspy.Signature):

""" You are a code combine agent, taking Python code output from many agents and combining the operations into 1 output

You also fix any errors in the code"""

agent_code_list =dspy.InputField(desc="A list of code given by each agent")

refined_complete_code = dspy.OutputField(desc="Refined complete code base")Optional Agents/Indexes

For the agent to work more smoothly and to catch some errors, I also built these additional agents or indexes.

# The same signature used in Data Viz agent post

class Data_Viz(dspy.Signature):

"""

You are AI agent who uses the goal to generate data visualizations in Plotly.

You have to use the tools available to your disposal

{dataframe_index}

{styling_index}

You must give an output as code, in case there is no relevant columns, just state that you don't have the relevant information

"""

goal = dspy.InputField(desc="user defined goal which includes information about data and chart they want to plot")

dataframe_context = dspy.InputField(desc=" Provides information about the data in the data frame. Only use column names and dataframe_name as in this context")

styling_context = dspy.InputField(desc='Provides instructions on how to style your Plotly plots')

code= dspy.OutputField(desc="Plotly code that visualizes what the user needs according to the query & dataframe_index & styling_context")

# An optional agent that checks if the user-defined goal works well

class goal_refiner_agent(dspy.Signature):

"""You take a user-defined goal given to a AI data analyst planner agent,

you make the goal more elaborate using the datasets available and agent_desc"""

dataset = dspy.InputField(desc="Available datasets loaded in the system, use this df_name,columns set df as copy of df_name")

Agent_desc = dspy.InputField(desc= "The agents available in the system")

goal = dspy.InputField(desc="The user defined goal ")

refined_goal = dspy.OutputField(desc='Refined goal that helps the planner agent plan better')Instead of feeding information about the whole dataset I also built a retriever that takes in information about the data available.

# I choose a LLama-Index based retriever as it was more convenient.

# Basically you can feed your data in a multiple ways.

# Providing description about column names, dataframe reference

# And also what purpose the data was collected etc.

dataframe_index = VectorStoreIndex.from_documents(docs)

# I also defined a styling index for the data visualization agent.

# Which has natural language instructions on how to style different visualizations

style_index = VectorStoreIndex.from_documents(styling_instructions)Putting everything together as one system

In DSPy, to compile a complex LLM application, you need to define a module with two essential methods: __init__ and forward.

The __init__ method initializes the module by defining all the variables that will be used throughout. The forward method, however, is where the core functionality is implemented. This method outlines how the outputs from one component interact with other components, effectively driving the application's logic.

# This module takes only one input on initiation

class auto_analyst(dspy.Module):

def __init__(self,agents):

# Defines the available agents, their inputs, and description

self.agents = {}

self.agent_inputs ={}

self.agent_desc =[]

i =0

for a in agents:

name = a.__pydantic_core_schema__['schema']['model_name']

# Using CoT prompting as from experience it helps generate better responses

self.agents[name] = dspy.ChainOfThought(a)

agent_inputs[name] ={x.strip() for x in str(agents[i].__pydantic_core_schema__['cls']).split('->')[0].split('(')[1].split(',')}

self.agent_desc.append(str(a.__pydantic_core_schema__['cls']))

i+=1

# Defining the planner, refine_goal & code combiner agents seperately

# as they don't generate the code & analysis they help in planning,

# getting better goals & combine the code

self.planner = dspy.ChainOfThought(analytical_planner)

self.refine_goal = dspy.ChainOfThought(goal_refiner_agent)

self.code_combiner_agent = dspy.ChainOfThought(code_combiner_agent)

# these two retrievers are defined using llama-index retrievers

# you can customize this depending on how you want your agents

self.dataset =dataframe_index.as_retriever(k=1)

self.styling_index = style_index.as_retriever(similarity_top_k=1)

def forward(self, query):

# This dict is used to quickly pass arguments for agent inputs

dict_ ={}

# retrieves the relevant context to the query

dict_['dataset'] = self.dataset.retrieve(query)[0].text

dict_['styling_index'] = self.styling_index.retrieve(query)[0].text

dict_['goal']=query

dict_['Agent_desc'] = str(self.agent_desc)

# output_dictionary that stores all agent outputs

output_dict ={}

# this comes up with the plan

plan = self.planner(goal =dict_['goal'], dataset=dict_['dataset'], Agent_desc=dict_['Agent_desc'] )

output_dict['analytical_planner'] = plan

plan_list =[]

code_list =[]

# if the planner worked as intended it should give agents seperated by ->

if plan.plan.split('->'):

plan_list = plan.plan.split('->')

# in case the goal is unclear, it sends it to refined goal agent

else:

refined_goal = self.refine_goal(dataset=data, goal=goal, Agent_desc= self.agent_desc)

forward(query=refined_goal)

# passes the goal and other inputs to all respective agents in the plan

for p in plan_list:

inputs = {x:dict_[x] for x in agent_inputs[p.strip()]}

output_dict[p.strip()]=self.agents[p.strip()](**inputs)

# creates a list of all the generated code, to be combined as 1 script

code_list.append(output_dict[p.strip()].code)

# Stores the last output

output_dict['code_combiner_agent'] = self.code_combiner_agent(agent_code_list = str(code_list))

return output_dict

# you can store all available agent signatures as a list

agents =[preprocessing_agent, statistical_analytics_agent, sk_learn_agent,data_viz_agent]

# Define the agentic system

auto_analyst_system = auto_analyst(agents)

# the system is preloaded with Chicago crime data

goal = "What is the cause of crime in Chicago?"

# Asking the agentic system to perform analysis for this query

output = auto_analyst_system(query = goal)Now looking at result of the query step by step.

For this query = ’What is the cause of crime in Chicago?’

Executing the plan, first preprocessing agent

Next statistical analysis agent

Next the Plotly data visualization agent

Finally, the code combiner agent, to put it all together

This is the output after executing the code from the last agent.

Seems cool, right? Want me to help you to design, implement and evaluate AI agents? Click here:

Limitations

Like many agents, it performs excellently when it works as intended. This is just the first iteration of a project I aim to improve over time. Please follow me and FireBird Technologies to stay updated. Here are the current limitations:

Hallucination: The agent sometimes produces inexecutable code due to hallucinations.

Unreliable/Inconsistent: The agent’s outputs are inconsistent, with different variations of the same query resulting in significantly different code.

Mixed Output: Many agents do not exclusively address separate aspects of the problem. For example, the data-preprocessing agent generates its own visualizations, while the data-visualization agent also creates its own.

Next Steps

This is an ongoing project; these are the steps I would likely take next to improve the agent

Optimize Signature/Prompt: DSPy is designed to evaluate LLM applications, this was just the implementation, next I would have to figure out the best prefix, signature & prompts.

Add Guardrails: Auto-fixing the code generated by the agent is a solution used in many other agentic systems. Trying to constrain prompt injection attacks is also on the roadmap

Add Memory/interaction: This agent does everything in one step, also there is no interaction between the individual components where they see each others outputs.

Build a UI: Right now I only built the agent backend for further testing and allowing user-feedback I would build a UI.

Thank you, for reading!

Please do subscribe to FireBirdTech